Camera, radar and lidar sensors give autonomous vehicles superhuman vision.

To drive better than humans, autonomous vehicles must first see better than humans.

Building reliable vision capabilities for self-driving cars has been a major development hurdle. By combining a variety of sensors, however, developers have been able to create a detection system that can “see” a vehicle’s environment even better than human eyesight.

The keys to this system are diversity (different types of sensors) and redundancy (overlapping sensors that can verify that what a car is detecting is accurate).

The three primary autonomous vehicle sensors are camera, radar and lidar. Working together, they provide the car visuals of its surroundings and help it detect the speed and distance of nearby objects, as well as their three-dimensional shape.

In addition, sensors known as inertial measurement units help track a vehicle’s acceleration and location.

To understand how these sensors work on a self-driving car, and how they replace and improve human driving vision, let’s start by zooming in on the most commonly used sensor, the camera.

The Camera Never Lies

From photos to video, cameras are the most accurate way to create a visual representation of the world, especially when it comes to self-driving cars.

Autonomous vehicles rely on cameras placed on every side (front, rear, left and right) to stitch together a 360-degree view of their environment. Some have a wide field of view, as much as 120 degrees, and a shorter range. Others focus on a narrower view to provide long-range visuals.

Some cars even integrate fish-eye cameras, which contain super-wide lenses that provide a panoramic view, to give a full picture of what’s behind the vehicle so it can park itself.

Though they provide accurate visuals, cameras have their limitations.

They can distinguish details of the surrounding environment; however, the distances of those objects need to be calculated to know exactly where they are. It’s also more difficult for camera-based sensors to detect objects in low-visibility conditions, like fog, rain or nighttime.
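To make the "distances need to be calculated" point concrete, here is a minimal sketch of one common way to estimate range from a single camera: the pinhole-camera relation, which assumes the real-world height of the object is known. The function name and the numbers are illustrative assumptions, not taken from any particular vehicle's software.

```python
def distance_from_pixel_height(focal_length_px: float,
                               real_height_m: float,
                               pixel_height: float) -> float:
    """Estimate range with the pinhole model: Z = f * H / h.

    focal_length_px: camera focal length, in pixels (assumed known from calibration)
    real_height_m:   assumed real-world height of the object, in meters
    pixel_height:    height of the object in the image, in pixels
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


# Hypothetical example: a 1.5 m tall car appears 50 px tall
# through a lens with a 1000 px focal length.
print(distance_from_pixel_height(1000.0, 1.5, 50.0))  # 30.0
```

The estimate is only as good as the assumed object height, which is one reason camera data is typically cross-checked against sensors that measure distance directly.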

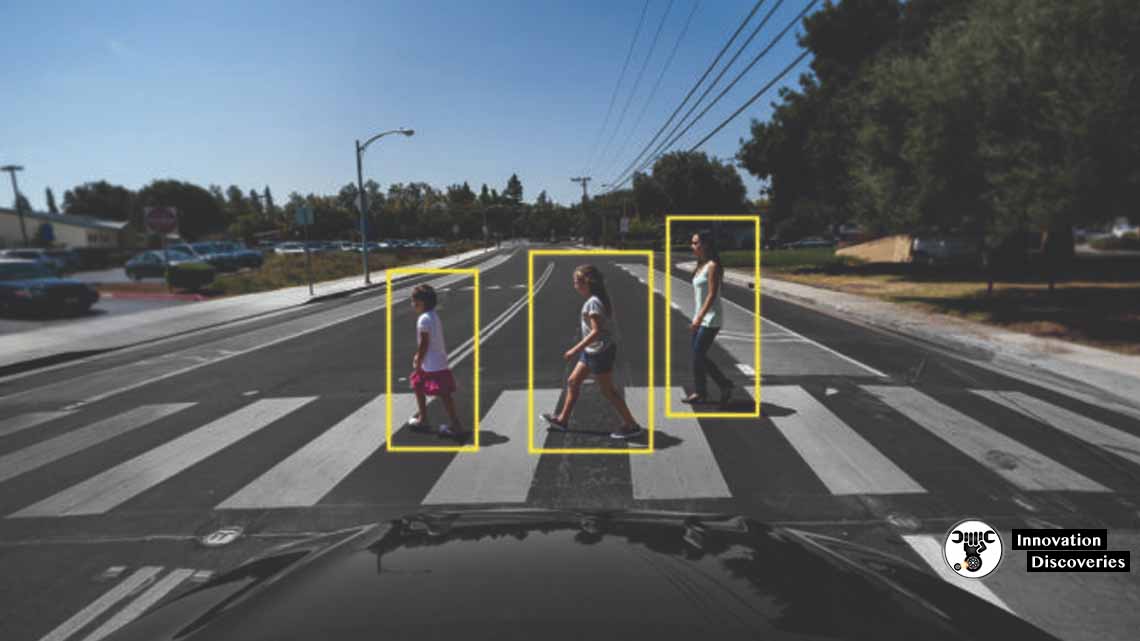

Perceive objects in their environment

On the Radar

Radar sensors can supplement camera vision in times of low visibility, like night driving, and improve detection for self-driving cars.

Traditionally used to detect ships, aircraft and weather formations, radar works by transmitting radio waves in pulses. Once those waves hit an object, they return to the sensor, providing data on the speed and location of the object.
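The physics behind that description can be sketched in a few lines. Distance follows from the round-trip time of the echo, and radial speed follows from the Doppler shift of the returned wave. The function names and the 77 GHz carrier used in the example are assumptions for illustration; real radar signal processing is considerably more involved.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_echo(round_trip_s: float) -> float:
    """Distance to the object: the pulse travels out and back, so divide by 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radial_speed_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Speed along the line of sight: v = f_d * c / (2 * f_0)."""
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# Hypothetical example: an echo returning after one microsecond
# corresponds to an object roughly 150 m away.
print(range_from_echo(1e-6))

# Hypothetical example: Doppler shift of 5 kHz on an assumed 77 GHz carrier.
print(radial_speed_from_doppler(5_000.0, 77e9))
```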

Highly accurate radar sensing technology.

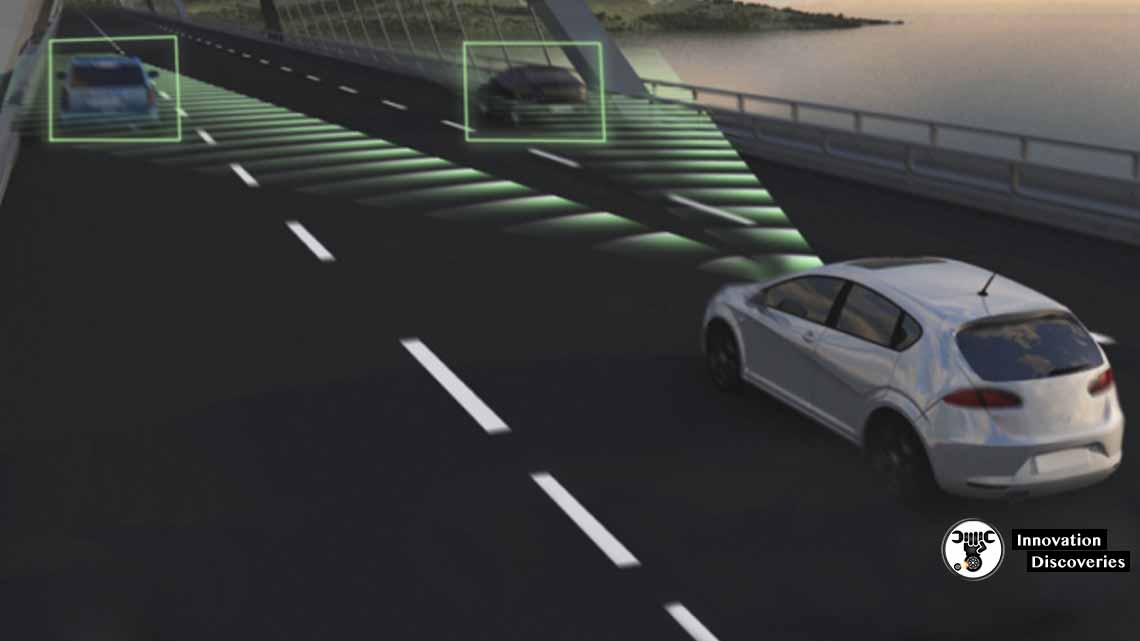

Like the vehicle’s cameras, radar sensors typically surround the car to detect objects at every angle. They’re able to determine speed and distance; however, they can’t distinguish between different types of vehicles.

While the data provided by surround radar and camera are sufficient for lower levels of autonomy, they don’t cover all situations without a human driver. That’s where lidar comes in.

Laser Focus

Camera and radar are common sensors: most new cars today already use them for advanced driver assistance and park assist. They can also cover lower levels of autonomy when a human is supervising the system.

However, for full driverless capability, lidar, a sensor that measures distances by pulsing lasers, has proven to be incredibly useful.

Lidar makes it possible for self-driving cars to have a 3D view of their environment.

It provides shape and depth to surrounding cars and pedestrians, as well as road geography. And, like radar, it works just as well in low-light conditions.

By emitting invisible lasers at incredibly fast speeds, lidar sensors can paint a detailed 3D picture from the signals that bounce back instantaneously. These signals create “point clouds” that represent the vehicle’s surrounding environment to enhance safety and diversity of sensor data.
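As a rough illustration of how a point cloud is built, each laser return can be treated as a range measured along a known beam direction and converted into an (x, y, z) point. The sketch below assumes a simple convention (x forward, y left, z up, angles in degrees); the function name and the sample returns are hypothetical.

```python
import math


def lidar_return_to_point(range_m: float,
                          azimuth_deg: float,
                          elevation_deg: float) -> tuple:
    """Convert one lidar return (range plus beam angles) to Cartesian coordinates."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    x = horizontal * math.cos(az)        # forward
    y = horizontal * math.sin(az)        # left
    z = range_m * math.sin(el)           # up
    return (x, y, z)


# Hypothetical returns: (range in meters, azimuth, elevation)
returns = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, 30.0)]
point_cloud = [lidar_return_to_point(*r) for r in returns]

for point in point_cloud:
    print(point)
```

A real sensor produces many thousands of such points per sweep; the conversion for each one is the same.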

Vehicles only need lidar in a few key places to be effective. However, the sensors are more expensive to implement, as much as 10 times the cost of camera and radar, and have a more limited range.

Putting It All Together

Camera, radar and lidar sensors provide rich data about the car’s environment.

However, much like the human brain processes visual data taken in by the eyes, an autonomous vehicle must be able to make sense of this constant flow of information.

Self-driving cars do this using a process called sensor fusion.

The sensor inputs are fed into a high-performance, centralized AI computer, such as the NVIDIA DRIVE AGX platform, which combines the relevant portions of data for the car to make driving decisions.

So rather than rely on just one type of sensor data at specific moments, sensor fusion makes it possible to fuse various information from the sensor suite, such as shape, speed and distance, to ensure reliability.
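One simple way to picture fusing two measurements of the same quantity is inverse-variance weighting, where the more certain sensor gets more say in the result. This is a textbook technique chosen for illustration, not a description of how DRIVE AGX or any production stack works; the function name, sensor readings and variances are all assumed.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Lower variance means the
    sensor is trusted more.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance readings to the same car, in meters:
camera = (31.0, 4.0)  # camera estimate, assumed less certain
radar = (30.0, 1.0)   # radar estimate, assumed more certain
distance, variance = fuse_estimates([camera, radar])
print(round(distance, 3), round(variance, 3))
```

The fused variance is smaller than either input variance, which is the numerical version of the reliability argument: two imperfect measurements combined are better than either alone.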

It also provides redundancy. When deciding to change lanes, receiving data from both camera and radar sensors before moving into the next lane greatly improves the safety of the maneuver, just as current blind-spot warnings serve as a backup for human drivers.
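The redundancy idea in the lane-change example can be sketched as a check that only passes when every required sensor independently reports the lane as clear. The `LaneReading` type, the function name and the sensor labels are hypothetical, and a real planner would weigh far more than a single boolean per sensor.

```python
from dataclasses import dataclass


@dataclass
class LaneReading:
    sensor: str       # e.g. "camera" or "radar" (labels assumed for illustration)
    lane_clear: bool  # whether this sensor sees the target lane as free


def safe_to_change_lanes(readings, required=("camera", "radar")) -> bool:
    """Allow the maneuver only if every required sensor reports a clear lane.

    A missing reading counts as "not clear", so a failed sensor blocks
    the lane change instead of silently being ignored.
    """
    clear_by_sensor = {r.sensor: r.lane_clear for r in readings}
    return all(clear_by_sensor.get(name, False) for name in required)


# Hypothetical readings: the camera sees an empty lane, but radar detects a car.
readings = [LaneReading("camera", True), LaneReading("radar", False)]
print(safe_to_change_lanes(readings))  # False
```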

The DRIVE AGX platform performs this process as the car drives, so it always has a complete, up-to-date picture of the surrounding environment.

This means that, unlike human drivers, autonomous vehicles don’t have blind spots and are always vigilant of the moving and changing world around them.
